Remote Embodiment for Shared Activity

Allowing others to see and understand us

When we interact with others at a distance, we are often limited to a shared artefact (e.g. a document). We can augment this using Skype, adding both voice and video, but this is frequently not enough: it is easy to misunderstand what someone is saying, or to lose sight of what the other person is doing with respect to that document. What is missing is an embodiment of the remote collaborator; that is, we cannot see their body or its relationship with the shared artefact or space. In this project, we are exploring how different ways of embodying remote participants can help support effective interaction.

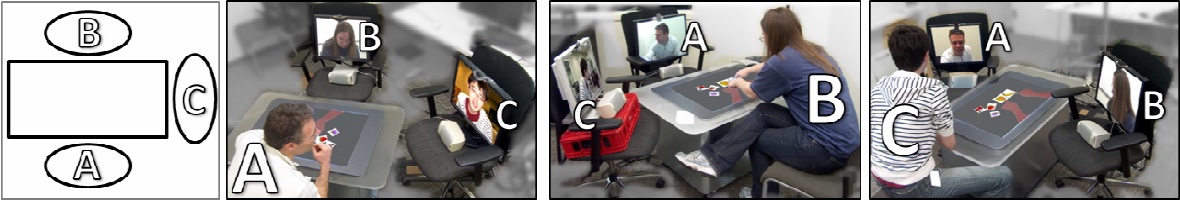

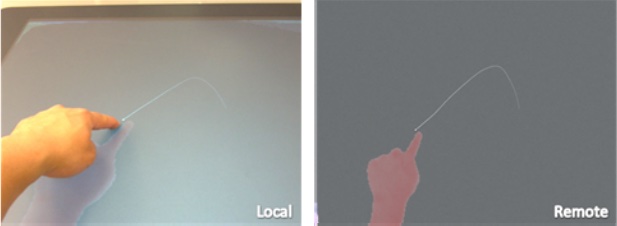

VideoArms. Many of our explorations focus on embodying a remote participant’s arms and hands in a shared visual workspace (e.g. Genest, Gutwin, Tang, Kalyn, & Ivkovic, 2013; Yarosh, Tang, Mokashi, & Abowd, 2013; Tang, Pahud, Inkpen, Benko, Tang, & Buxton, 2010; Tang, Genest, Shoemaker, Gutwin, Fels, & Booth, 2010; Tang, Neustaedter, & Greenberg, 2007; Tang, Boyle, & Greenberg, 2005; Tang & Greenberg, 2005; Tang, Neustaedter, & Greenberg, 2004). Arms are important because they provide a rich means of expressing intent, both intentionally (e.g. when we explicitly point at things) and unintentionally (e.g. when we are simply working, our arms touch the things we care about). We have designed new ways of capturing and visualizing different characteristics of these arms (e.g. using the Kinect to capture height information (Genest, Gutwin, Tang, Kalyn, & Ivkovic, 2013)), and demonstrated that they can be effective in three-way interaction (Tang, Pahud, Inkpen, Benko, Tang, & Buxton, 2010). We have also explored how the fidelity of the representation can be used for expressive purposes (Tang, Genest, Shoemaker, Gutwin, Fels, & Booth, 2010).

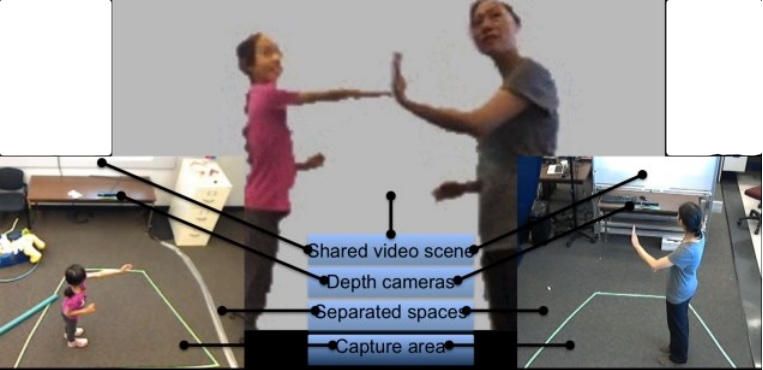

Children and Video. In designing systems to support interaction between children, we have found that simply showing video of a person’s head (for example, through Skype) is frequently not enough. Instead, it is useful to scaffold the interaction, for instance by focusing on interaction with a tabletop (Yarosh, Tang, Mokashi, & Abowd, 2013), or by allowing for full-body play and interaction with digital objects (Cohen, Dillman, MacLeod, Hunter, & Tang, 2014; Hunter, Maes, Tang, & Inkpen, 2014).

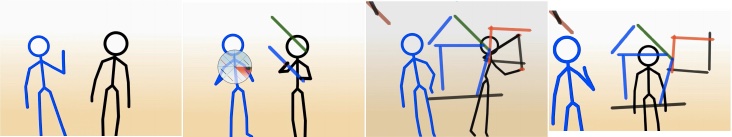

Bodily Representation. We have designed several systems to explore how full-body representation can be used for various applications. With OneSpace (Ledo, Aseniero, Greenberg, Boring, & Tang, 2013; Cohen, Dillman, MacLeod, Hunter, & Tang, 2014; Dillman & Tang, 2013; Ledo, Aseniero, Boring, Greenberg, & Tang, 2013; Ledo, Aseniero, Boring, & Tang, 2012), we explored high-fidelity video representation and how it affects interaction. In a separate project involving art therapy, we explored the use of simple stickman representations to protect the identity of participants (Jones, Hankinson, Collie, & Tang, 2014). We have also been exploring a complete absence of visual representation, relying instead on vibrotactile (haptic) representation (Alizadeh, Tang, Sharlin, & Tang, 2014).

Publications

Seth Hunter, Pattie Maes, Anthony Tang, and Kori Inkpen. (2014). WaaZaam! Supporting Creative Play at a Distance in Customized Video Environments. In CHI ’14 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, ACM, 1197–1206. (conference).

Acceptance: 22.8% - 471/2064. Notes: Honourable Mention - Top 5% of all submissions.

Maayan Cohen, Kody Dillman, Haley MacLeod, Seth Hunter, and Anthony Tang. (2014). OneSpace: Shared Visual Scenes for Active Freeplay. In CHI ’14 Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, ACM, 2177–2180. (conference).

Acceptance: 22.8% - 471/2064. Notes: Honourable Mention - Top 5% of all submissions.

Hesam Alizadeh, Richard Tang, Ehud Sharlin, and Anthony Tang. (2014). Haptics in Remote Collaborative Exercise Systems for Seniors. In CHI EA ’14: CHI ’14 Extended Abstracts on Human Factors in Computing Systems, ACM, 2401–2406. (poster).

Acceptance: 49% - 241/496. Notes: 6-page abstract + poster.

Brennan Jones, Sara Prins Hankinson, Kate Collie, and Anthony Tang. (2014). Supporting Non-Verbal Visual Communication in Online Group Art Therapy. In CHI EA ’14: CHI ’14 Extended Abstracts on Human Factors in Computing Systems, ACM, 1759–1764. (poster).

Acceptance: 49% - 241/496. Notes: 6-page abstract + poster.

(video).

Notes: People’s choice award.

Kody Dillman and Anthony Tang. (2013). Towards Next-Generation Remote Physiotherapy with Videoconferencing Tools. Department of Computer Science, University of Calgary. (techreport).

David Ledo, Bon Adriel Aseniero, Saul Greenberg, Sebastian Boring, and Anthony Tang. (2013). OneSpace: shared depth-corrected video interaction. In CHI EA ’13: CHI ’13 Extended Abstracts on Human Factors in Computing Systems, ACM, 997–1002. (poster).

Aaron M. Genest, Carl Gutwin, Anthony Tang, Michael Kalyn, and Zenja Ivkovic. (2013). KinectArms: a toolkit for capturing and displaying arm embodiments in distributed tabletop groupware. In CSCW ’13: Proceedings of the 2013 conference on Computer supported cooperative work, ACM, 157–166. (conference).

Acceptance: 35.5% - 139/390.

Svetlana Yarosh, Anthony Tang, Sanika Mokashi, and Gregory D. Abowd. (2013). "Almost touching": parent-child remote communication using the sharetable system. In CSCW ’13: Proceedings of the 2013 conference on Computer supported cooperative work, ACM, 181–192. (conference).

Acceptance: 35.5% - 139/390.

David Ledo, Bon Adriel Aseniero, Sebastian Boring, Saul Greenberg, and Anthony Tang. (2013). OneSpace: Bringing Depth to Remote Interactions. In Future of Personal Video Communications: Beyond Talking Heads - Workshop at CHI 2013. (Oduor, Erick and Neustaedter, Carman and Venolia, Gina and Judge, Tejinder, Eds.) (workshop).

David Ledo, Bon Adriel Aseniero, Sebastian Boring, and Anthony Tang. (2012). OneSpace: Shared Depth-Corrected Video Interaction. Department of Computer Science, University of Calgary, Calgary, Alberta, Canada T2N 1N4. (techreport).

Anthony Tang, Aaron Genest, Garth Shoemaker, Carl Gutwin, Sid Fels, and Kellogg S. Booth. (2010). Enhancing Expressiveness in Reference Space. In New Frontiers in Telepresence - CSCW 2010 Workshop. (Venolia, Gina and Inkpen, Kori and Olson, Judith and Nguyen, David, Eds.) (workshop).

Anthony Tang, Michel Pahud, Kori Inkpen, Hrvoje Benko, John C. Tang, and Bill Buxton. (2010). Three’s company: understanding communication channels in three-way distributed collaboration. In CSCW ’10: Proceedings of the 2010 ACM conference on Computer supported cooperative work, ACM, 271–280. (conference).

Notes: Best Paper Nominee (top 5% of submissions).

Anthony Tang, Carman Neustaedter, and Saul Greenberg. (2007). Videoarms: embodiments for mixed presence groupware. In People and Computers XX—Engage, Springer London, 85–102. (conference).

Anthony Tang and Saul Greenberg. (2005). Supporting Awareness in Mixed Presence Groupware. In Awareness Systems: Known Results, Theory, Concepts and Future Challenges - Workshop at CHI 2005. (Markopoulos, Paulos and Ruyter, Boris De and Mackay, Wendy, Eds.) (workshop).

Anthony Tang, Michael Boyle, and Saul Greenberg. (2005). Understanding and mitigating display and presence disparity in mixed presence groupware. Journal of research and practice in information technology 37, 2: 193–210. (journal).

Notes: Invited article.

Anthony Tang, Carman Neustaedter, and Saul Greenberg. (2004). VideoArms: supporting remote embodiment in groupware. In Video Proceedings of CSCW 2004. (video).