Visual Interaction Cues in Video Games for AR

Posted 20 Mar 2018

Video games use a visual language to guide players through the virtual worlds they inhabit. For example, a player might see a blinking arrow telling them which way to move their avatar through the game. We call this a visual interaction cue. Our paper documents this visual language by categorizing the purposes these cues are designed for, how they are presented within a game, and how they are activated. Our idea was to use this catalogue to inspire designs for new augmented reality systems as AR becomes more popular.

Our data collection took us across a bunch of different game genres, where the goal was to identify and document specific examples of visual interaction cues. We spent a long time discussing, organizing, tearing apart, and reorganizing the categories. Ultimately, we landed on a three-axis framework for describing each visual interaction cue we found: purpose, markedness, and trigger.

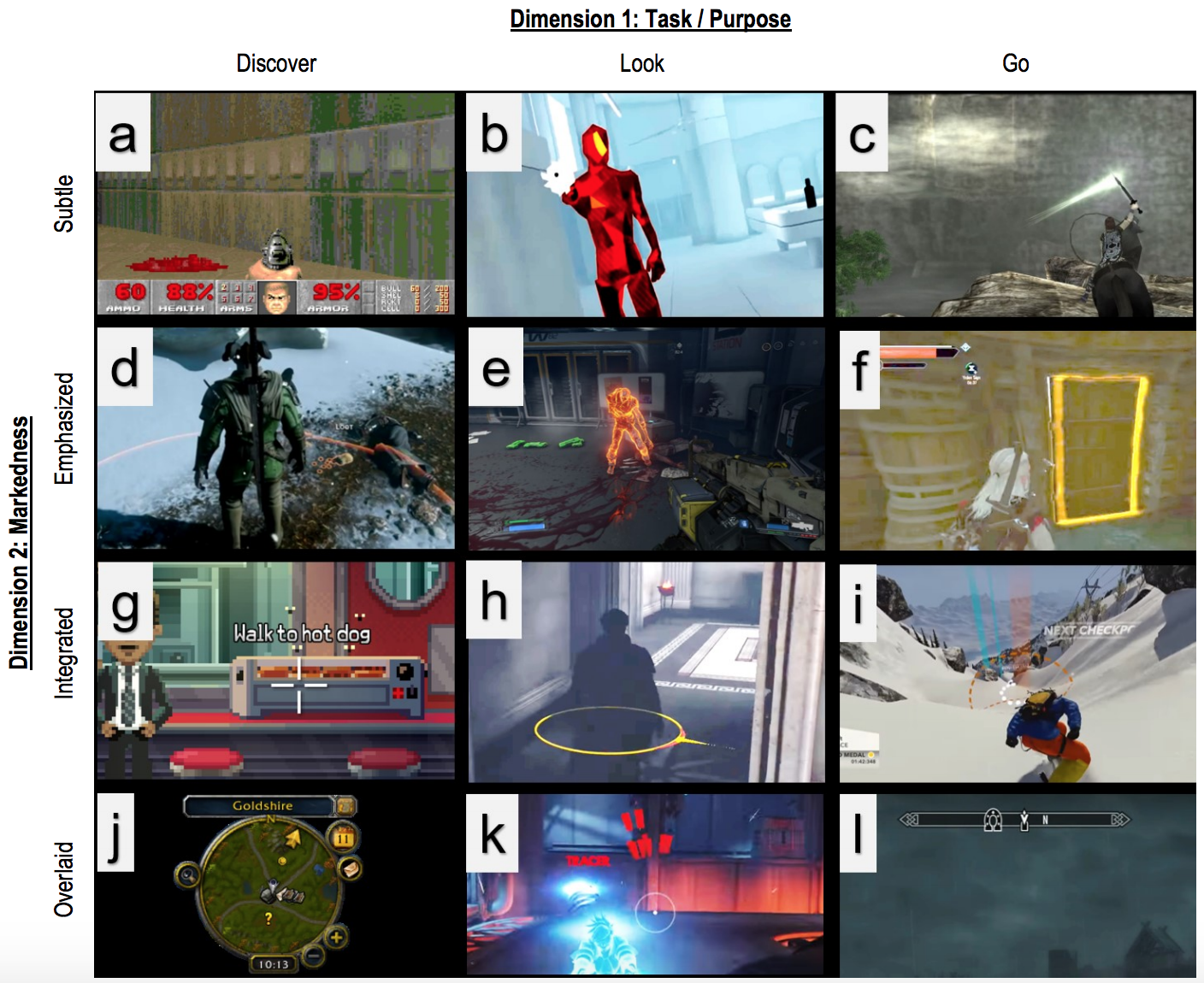

Purpose was broken into three categories: discover, to help the player find or identify something in the environment; look, to help the player see something in a timely manner; and go, to help the player get somewhere in the environment.

Markedness was broken into four categories: subtle, where the cue blends seamlessly into the environment or visual scene; emphasized, where an existing object or surface is highlighted; integrated, where a virtual object (e.g. a 3D arrow) is added to the player's view and tracks the object it refers to; and overlaid, where virtual elements are drawn atop the viewport and do not track anything in the view.

Finally, trigger describes what actually causes the cue to appear.

My favourite image from the paper illustrates the different cues pretty well:

In turn: (a) a subtle offset color on the wall helps the player discover a hidden area; (b) a flash tells the player that someone is shooting at them; (c) the light shining from the sword tells the player where to go next; (d) the "loot bag" next to the dead body is highlighted to tell the player they can interact with it; (e) the enemy suddenly glows red to indicate that it is ready for a timed attack; (f) the door is highlighted to tell the player where to go; (g) the hot dog has a visual annotation to tell the player it can be interacted with; (h) a circular disc drawn around the player shows the direction of an enemy; (i) added cues show the player where to snowboard; (j) an overview map shows where different interactable objects or characters are; (k) flares on the reticle show where the player is taking damage; and (l) the compass at the top shows the player where to go.

While the above is probably exhausting to read, note that each row and column of the figure is meaningful. Each column groups cues with a similar purpose: helping the player discover interactable objects, observe timed events, or know where to go. Similarly, each row shows a different visual way in which the cues are presented.

I was excited not only to help develop this framework, but also to think about how these cues can be used in the design of future AR systems. Up, up and away!

For more information, take a look at:

- the game examples that we found and use in the paper, or

- the CHI 2018 paper that documents the framework.

Here’s the full citation:

Kody Dillman, Terrance Mok, Anthony Tang, Lora Oehlberg, and Alex Mitchell. (2018). A Visual Interaction Cue Framework from Video Game Environments for Augmented Reality. In CHI 2018: Proceedings of the 2018 SIGCHI Conference on Human Factors in Computing Systems, 10. Acceptance rate: 25.7% (667/2595).

Reflection: For me, this was a total passion project. I love the idea of going into worlds that I know and love (i.e. video games), where people have sort of developed a "lore" or "way" of doing things (whether consciously or unconsciously), and trying to document it in a way that is useful for something else. Kody's MSc project had originally begun with concrete instantiations of these ideas, but after a while we realized we needed a more grounded approach to thinking about these cues. Thus, the project was born. Amazingly fun.